Setting Up Bubble.io to Work with the OpenAI Platform

This article is the first in a series on integrating Bubble.io with the OpenAI platform. Free plugins are available, but they generally lack clear instructions on how to use the API calls or how to integrate OpenAI’s platform effectively. You don’t actually need them once you understand the order of the calls and how to make them work (maybe that’s why the plugins are free!).

Here, we’ll start with the setup: configuring the key API calls that connect Bubble.io to OpenAI, then walking through the basic steps to chat with an assistant and retrieve its response. This setup lays the groundwork for building AI-driven features in your Bubble app.

Future articles will cover how to use these setups to create intelligent workflows and manage conversations efficiently.

Setting Up Bubble’s API Plugin

To start using OpenAI within Bubble, we need to configure Bubble’s API plugin. This setup will allow us to send requests to OpenAI, receive responses, and ultimately manage a conversational flow within our Bubble application.

Step 1: Install the API Plugin

- In your Bubble editor, go to the Plugins tab and search for the API Connector plugin.

- Click Install to add it to your project.

Step 2: Configure OpenAI as an API

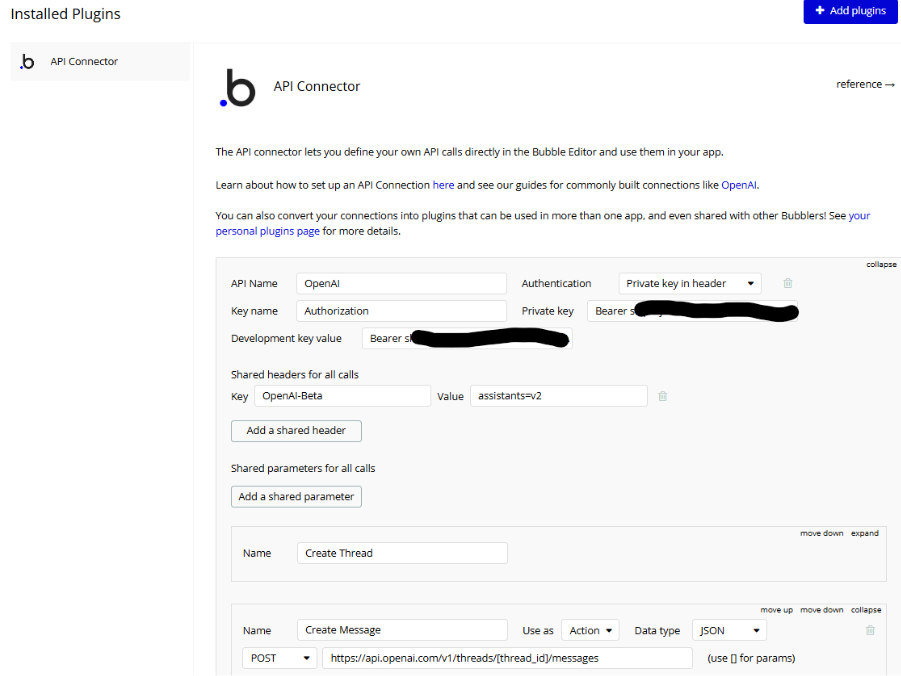

- After installing the API Connector, click on it to access the settings.

- Click “Add another API” to add a new API and name it “OpenAI” or any descriptive name of your choice.

- Under Authentication, select Private key in header. Set Key name to Authorization and Private key to Bearer [your API key] (the word Bearer, a space, then your key) to authenticate requests to OpenAI. Copy the same value into Development key value as well.

- Add a shared header named OpenAI-Beta with the value assistants=v2. The Assistants API requires this header, and assistants are our preferred way of interacting with the OpenAI platform.
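For anyone who wants to check the same configuration outside Bubble, here is a minimal Python sketch of the headers the API Connector ends up sending. The helper name openai_headers is hypothetical; the Authorization and OpenAI-Beta values come from the setup above, and Content-Type is added because a raw HTTP client must declare JSON bodies itself.

```python
def openai_headers(api_key: str) -> dict:
    """Headers matching the API Connector setup (hypothetical helper, not part of any SDK)."""
    return {
        # "Private key in header": key name Authorization, value "Bearer <key>"
        "Authorization": f"Bearer {api_key}",
        # Shared header that opts in to the Assistants v2 API
        "OpenAI-Beta": "assistants=v2",
        # Raw HTTP clients must declare JSON bodies themselves
        "Content-Type": "application/json",
    }


headers = openai_headers("sk-your-key-here")  # placeholder; never hard-code a real key
print(headers["Authorization"])  # Bearer sk-your-key-here
```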

Step 3: Set Up the API Call for Starting a Thread

- Add an API Call within the OpenAI API setup. Name it something like “Start Thread” to keep things organised.

- Ensure the call is visible in workflows by setting Use as to “Action”.

- Use the POST method, as we’ll be sending a request to OpenAI to start a new conversation thread.

- Enter OpenAI’s Create Thread endpoint as the URL: https://api.openai.com/v1/threads
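In raw HTTP terms, Start Thread is an empty POST to https://api.openai.com/v1/threads. The sketch below builds (but does not send) that request with Python's standard library. start_thread_request is a hypothetical name, and the headers dict passed in is assumed to hold the Authorization and OpenAI-Beta values configured in Step 2.

```python
import urllib.request

THREADS_URL = "https://api.openai.com/v1/threads"


def start_thread_request(headers: dict) -> urllib.request.Request:
    """Build the Start Thread call (hypothetical helper): POST, no JSON body required."""
    # data=b"" sends an empty body, which is all Create Thread needs
    return urllib.request.Request(THREADS_URL, data=b"", headers=headers, method="POST")


req = start_thread_request({"Authorization": "Bearer sk-your-key-here",
                            "OpenAI-Beta": "assistants=v2"})
print(req.get_method(), req.full_url)  # POST https://api.openai.com/v1/threads
```

Passing req to urllib.request.urlopen would actually send it; in Bubble, clicking Initialize Call does the equivalent.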

Step 4: Test the API Call

- Use the Initialize Call button to test if Bubble can successfully connect with OpenAI. If successful, Bubble will display a sample response structure, which you can save to ensure data types are set correctly in your app.

- This response structure will help you later when setting up workflows that manage the conversation flow.
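The saved Start Thread response is a thread object, and the only field the later calls need is its id. The sample below mimics that shape with made-up values (it is illustrative, not real output) and shows pulling out the ID that later calls substitute into [thread_id].

```python
import json

# Illustrative Create Thread response; the ID and timestamp are made up.
sample_response = """
{
  "id": "thread_abc123",
  "object": "thread",
  "created_at": 1700000000,
  "metadata": {},
  "tool_resources": {}
}
"""

thread = json.loads(sample_response)
thread_id = thread["id"]  # the value every later call puts into [thread_id]
print(thread_id)  # thread_abc123
```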

Step 5: Configure JSON Settings

- Note: When creating a thread, there is no body required.

- For calls that do require a body (such as sending messages or starting runs), scroll to the Body section and enter the JSON structure for the request. To make part of the body dynamic, wrap a parameter name in angle brackets, such as <content>, then untick that parameter’s “private” checkbox so it can be set from workflows.

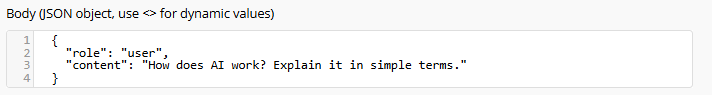

- Messages: for a plain-text message, use a body like:

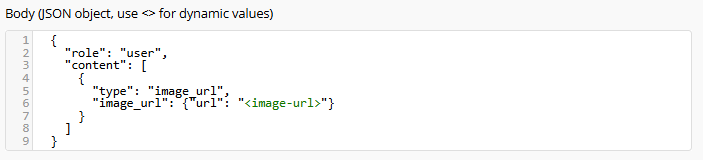

- Or, to send an image URL for the assistant to process, make content an array containing a text part and an image_url part.

- Make sure the JSON format matches OpenAI’s requirements, as errors in formatting can prevent the call from executing correctly.
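Here is a minimal sketch of both message bodies, assuming the Assistants v2 message format: either a plain-text content string, or a content array mixing a "text" part and an "image_url" part. The helper names are hypothetical. Building the JSON with json.dumps also shows why formatting matters: quotes or line breaks in user text must be escaped, which a hand-written template does not do for you.

```python
import json


def text_message_body(text: str) -> str:
    """JSON body for a plain-text user message (hypothetical helper)."""
    return json.dumps({"role": "user", "content": text})


def image_message_body(text: str, image_url: str) -> str:
    """JSON body asking the assistant to look at an image by URL (hypothetical helper).

    Assumes the Assistants v2 content-array format with "text" and "image_url" parts.
    """
    return json.dumps({
        "role": "user",
        "content": [
            {"type": "text", "text": text},
            {"type": "image_url", "image_url": {"url": image_url}},
        ],
    })


# json.dumps escapes the embedded quotes, keeping the body valid JSON
print(text_message_body('Summarise "Chapter 1" for me'))
# {"role": "user", "content": "Summarise \"Chapter 1\" for me"}
```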

This initial setup gets the API connection ready. With the API Connector properly configured, we’re now set to start threads, manage messages, and request responses from OpenAI directly in Bubble.

Interacting with OpenAI Through Bubble

With the API plugin configured, we now need to set up separate API calls for each interaction with OpenAI. This includes creating a message, starting a run, and retrieving the result of that run. To streamline the process, we’ll use a repeatable approach for each call.

Setting Up the Required API Calls

Create Message

- Endpoint: Create Message API

- Method: POST

- Use As: Action

- URL: https://api.openai.com/v1/threads/[thread_id]/messages

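- Untick “private” on the thread_id parameter so workflows can supply it

- To initialize the call, use a real thread ID, such as the one returned when you tested Start Thread, or one listed at https://platform.openai.com/threads/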

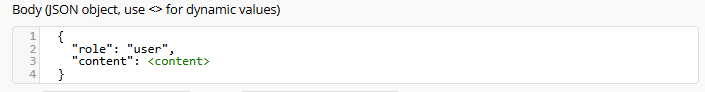

- Body: Include role (set to user) and content, which contains the user’s message.

- Example JSON body: { "role": "user", "content": "<content>" }

- Note: For <content> in the JSON body, ensure you untick the “private” checkbox in the API Connector settings to make it usable in workflows.
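The bracketed [thread_id] in the URL is a path parameter that gets replaced with a real ID when the call runs. As a sketch of that substitution (fill_url is a hypothetical helper, not part of Bubble or OpenAI), the same template approach covers every URL in this section:

```python
import re
from urllib.parse import quote

THREAD_MESSAGES = "https://api.openai.com/v1/threads/[thread_id]/messages"


def fill_url(template: str, **params: str) -> str:
    """Replace each [name] placeholder with its URL-quoted value (hypothetical helper)."""
    def substitute(match) -> str:
        name = match.group(1)
        if name not in params:
            raise KeyError(f"missing value for [{name}]")
        return quote(params[name], safe="")

    return re.sub(r"\[(\w+)\]", substitute, template)


print(fill_url(THREAD_MESSAGES, thread_id="thread_abc123"))
# https://api.openai.com/v1/threads/thread_abc123/messages
```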

Create Run

- Endpoint: Create Run API

- Method: POST

- Use As: Action

- URL: https://api.openai.com/v1/threads/[thread_id]/runs

- Untick “private” on the thread_id parameter

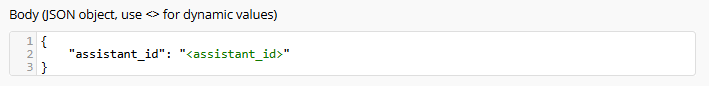

- Body: Use assistant_id from the OpenAI platform (https://platform.openai.com/assistants) to initiate the run that will generate a response.

- Example JSON body: { "assistant_id": "<assistant_id>" }

- Note: When initializing this call, copy the run ID (the id field in the response); you’ll need it to initialize Retrieve Run.
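A sketch of the Create Run body and of reading the run ID back. The assistant ID is a hypothetical placeholder, and the response is a trimmed, illustrative run object (a real one has many more fields); a freshly created run typically starts with status queued.

```python
import json

ASSISTANT_ID = "asst_abc123"  # hypothetical; copy yours from platform.openai.com/assistants


def create_run_body(assistant_id: str) -> str:
    """JSON body for Create Run: assistant_id is the only required field."""
    return json.dumps({"assistant_id": assistant_id})


# Trimmed, illustrative Create Run response (IDs are made up)
sample_run = json.loads("""
{
  "id": "run_abc123",
  "object": "thread.run",
  "thread_id": "thread_abc123",
  "assistant_id": "asst_abc123",
  "status": "queued"
}
""")

run_id = sample_run["id"]  # save this for Retrieve Run
print(create_run_body(ASSISTANT_ID))  # {"assistant_id": "asst_abc123"}
print(run_id, sample_run["status"])   # run_abc123 queued
```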

Retrieve Run

- Endpoint: Retrieve Run API

- Method: GET

- Use As: Action

- URL: https://api.openai.com/v1/threads/[thread_id]/runs/[run_id]

- Parameters: Use thread_id and run_id to get the status and result of the run.
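Retrieve Run reports progress through the run's status field. The sketch below shows how a polling workflow could decide what to do next, assuming the run statuses listed in OpenAI's API reference at the time of writing (queued, in_progress, cancelling, requires_action, completed, failed, cancelled, expired, incomplete). next_action is a hypothetical helper. Only completed means the reply is ready; requires_action arises when the assistant has tools that need your app to respond, so polling alone will not move it forward.

```python
PENDING = {"queued", "in_progress", "cancelling"}


def next_action(status: str) -> str:
    """Map a run status to the workflow's next step (hypothetical helper)."""
    if status in PENDING:
        return "poll"            # still working: call Retrieve Run again after a short wait
    if status == "completed":
        return "fetch_messages"  # reply is ready: call Retrieve Messages
    # requires_action, failed, cancelled, expired, incomplete, or any status
    # added to the API later: stop polling and handle it explicitly
    return "stop"


for s in ("queued", "in_progress", "completed", "failed"):
    print(s, "->", next_action(s))
```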

Retrieve Messages

- Endpoint: List Messages API

- Method: GET

- URL: https://api.openai.com/v1/threads/[thread_id]/messages

- Use As: Action

- Parameters: Use thread_id to list the thread’s messages. By default the list is ordered newest first, so after a completed run the assistant’s reply is at the top.
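List Messages returns a list object whose data array is ordered newest first by default, and each message's text sits at content[i].text.value. A sketch of pulling out the assistant's latest reply, using a trimmed, illustrative response with made-up IDs and text; latest_assistant_reply is a hypothetical helper.

```python
import json
from typing import Optional

# Trimmed, illustrative List Messages response (IDs and text are made up)
sample_list = json.loads("""
{
  "object": "list",
  "data": [
    {"id": "msg_2", "role": "assistant",
     "content": [{"type": "text", "text": {"value": "Hi! How can I help?", "annotations": []}}]},
    {"id": "msg_1", "role": "user",
     "content": [{"type": "text", "text": {"value": "Hello", "annotations": []}}]}
  ]
}
""")


def latest_assistant_reply(message_list: dict) -> Optional[str]:
    """Return the text of the newest assistant message, or None if there is none."""
    for message in message_list["data"]:  # newest first (the API's default order)
        if message["role"] == "assistant":
            # Join the text parts; skip non-text parts such as images
            parts = [c["text"]["value"] for c in message["content"] if c["type"] == "text"]
            return "\n".join(parts)
    return None


print(latest_assistant_reply(sample_list))  # Hi! How can I help?
```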

Full Workflow Overview

From start to end, the following actions will be called:

- Create Thread: Start by creating a new conversation thread to initiate communication.

- Send Message(s): Add one or more messages to the conversation thread to build the context.

- Start Run: Initiate a run to process the conversation and generate a response from OpenAI.

- Retrieve Run: Poll periodically to retrieve the run’s status until it is “completed” (or it ends in another state such as “failed”, “cancelled” or “expired”).

- Retrieve Messages: When the Run is complete, we can retrieve the message list, which will contain the response.
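The five steps above map onto a short loop. The sketch below assumes a hypothetical call(method, path, body) function that sends one request to https://api.openai.com/v1 plus the given path and returns the parsed JSON; it is passed in so the flow can be exercised without network access. ask_assistant is likewise hypothetical, and the poll interval and attempt limit are arbitrary choices, not OpenAI recommendations.

```python
import time
from typing import Callable, Optional, Tuple

# Hypothetical transport: call(method, path, body) -> parsed JSON response
Call = Callable[[str, str, Optional[dict]], dict]

PENDING = {"queued", "in_progress", "cancelling"}


def ask_assistant(call: Call, assistant_id: str, text: str,
                  thread_id: Optional[str] = None,
                  poll_seconds: float = 1.0, max_polls: int = 60) -> Tuple[str, str]:
    """Run one conversation turn and return (thread_id, reply_text)."""
    # 1. Create Thread, unless continuing an existing conversation
    if thread_id is None:
        thread_id = call("POST", "/threads", None)["id"]
    # 2. Send Message
    call("POST", f"/threads/{thread_id}/messages", {"role": "user", "content": text})
    # 3. Start Run
    run = call("POST", f"/threads/{thread_id}/runs", {"assistant_id": assistant_id})
    # 4. Retrieve Run until the status leaves the pending states (or we give up)
    for _ in range(max_polls):
        if run["status"] not in PENDING:
            break
        time.sleep(poll_seconds)
        run = call("GET", f"/threads/{thread_id}/runs/{run['id']}", None)
    if run["status"] != "completed":
        raise RuntimeError(f"run not completed (status: {run['status']!r})")
    # 5. Retrieve Messages: newest first, so the first assistant message is the reply
    messages = call("GET", f"/threads/{thread_id}/messages", None)["data"]
    reply = next(m for m in messages if m["role"] == "assistant")
    return thread_id, reply["content"][0]["text"]["value"]  # assumes a text part first
```

Passing the thread_id returned by an earlier turn skips step 1, which is how an existing conversation continues without resending its history.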

Threads are the assistant’s “memory”: a thread stores the conversation history, so when using an assistant we don’t need to resend the chat history with every message. Bear in mind that a thread is still limited by the model’s context window, so we should create a new thread for each new conversation rather than reusing one indefinitely.

However, we can continue conversations with old threads pretty much whenever we want.

That’s it for now

This is the base set of calls required to get things done with OpenAI’s platform from Bubble. The next article will provide an example of using these calls in workflows to build a functional, AI-driven application.